Social Media: Prospect and Challenges

SAHYOG must adhere to the safeguards and procedures in Section 69A of IT Act

From UPSC perspective, the following things are important :

Mains level: Regulation of online content;

Why in the News?

Social media platform X told the Delhi High Court that it cannot be forced to join the government’s SAHYOG portal, raising concerns that the portal might be misused to restrict online content.

What is the SAHYOG portal?

How does the government justify the creation of SAHYOG portal?

- Enhancing Law Enforcement Efficiency: The government argues that SAHYOG enables faster coordination between law enforcement agencies, social media platforms, and telecom providers to remove unlawful content swiftly. Example: During communal riots, law enforcement can quickly flag and remove misinformation that could incite violence.

- Legal Obligation Under IT Act: The government justifies SAHYOG under Section 79(3)(b) of the IT Act, which mandates that intermediaries remove content upon receiving government notification to retain their safe harbour protection. Example: If a government agency reports a post promoting terrorism, the platform must take it down to comply with the law.

- Court-Mandated Need for Real-Time Action: The government cites the Delhi High Court’s observation in Shabana vs Govt of NCT of Delhi and Ors., which highlighted the necessity of a real-time content removal mechanism to handle urgent cases. Example: In cases of child exploitation content, immediate action through SAHYOG ensures rapid takedown and prevents further harm.

Why has X (formerly Twitter) challenged the SAHYOG portal in the Delhi High Court?

- Existence of an Independent Mechanism: X asserts that it has its own system to process valid legal requests for content removal and cannot be compelled to join the SAHYOG portal.

- Legal Concerns Over Parallel Mechanisms: The company argues that the SAHYOG portal creates a parallel content removal mechanism without the stringent legal safeguards outlined in Section 69A of the Information Technology Act, 2000.

- Potential for Unchecked Censorship: X is concerned that the portal could lead to unrestrained censorship by allowing multiple government officials to issue content removal orders without proper oversight.

How does Section 79(3)(b) of the IT Act differ from Section 69A in terms of content takedown provisions?

| Aspect | Section 79(3)(b) | Section 69A |
|---|---|---|
| Nature of Obligation | | |
| Who Issues Takedown Orders? | | |
| Legal Safeguards & Due Process | | |
| Scope of Application | | |
| Example Scenarios | | |

Who are the key stakeholders involved in the SAHYOG portal’s implementation and legal challenge?

- Government Authorities: The Ministry of Home Affairs (MHA) developed the SAHYOG portal to enhance coordination between law enforcement agencies and social media platforms for combating cybercrime. The portal aims to automate the process of sending notices to intermediaries for the removal or disabling of unlawful online content.

- Social Media Platforms (Intermediaries): Companies like X Corp (formerly Twitter) are directly impacted by the portal’s operations. X Corp has legally challenged the government’s use of the SAHYOG portal, arguing that it functions as a censorship tool by bypassing established legal safeguards and infringing upon constitutional rights such as freedom of speech.

- Judiciary: The Delhi High Court plays a pivotal role in adjudicating disputes related to the SAHYOG portal. It has urged various states, union territories, and intermediaries to join the portal to effectively combat cybercrime, while also addressing grievances from law enforcement agencies regarding data access from intermediaries.

Where does the Supreme Court’s ruling in Shreya Singhal vs Union of India come into play in the debate over SAHYOG?

- Precedent on Online Free Speech & Due Process: The Shreya Singhal ruling struck down Section 66A of the IT Act for being vague and overbroad, while upholding Section 69A with due process requirements, including hearings for content creators. Example: A journalist’s tweet flagged via SAHYOG may be removed without an opportunity to challenge it, violating Shreya Singhal principles.

- Judicial Safeguards & Preventing Arbitrary Censorship: Shreya Singhal upheld Section 69A but mandated transparent procedures, review committees, and justifications for content blocking. Example: If SAHYOG bulk blocks dissenting voices without an independent review, it could breach Shreya Singhal safeguards.

Way forward:

- Ensure Judicial Oversight & Accountability – Implement an independent review mechanism to prevent arbitrary censorship and align with the Shreya Singhal ruling.

- Enhance Transparency & Due Process – Mandate clear guidelines, periodic transparency reports, and an appeal system for content takedown decisions.

Mains PYQ:

Question: Discuss Section 66A of IT Act, with reference to its alleged violation of Article 19 of the Constitution. [UPSC 2013]

Linkage: This question is linked to the regulation of online content and the potential restrictions on the freedom of speech and expression guaranteed by Article 19 of the Constitution. It is relevant because content takedown provisions also regulate online speech and must be consistent with constitutional rights.

AI Content Detectors to Combat Deepfakes

From UPSC perspective, the following things are important :

Prelims level: Emerging Technologies; Deepfake Technology;

Why in the News?

During the General Elections 2024, the proliferation of AI-generated content (AIGC), including deepfake videos featuring prominent figures like Aamir Khan and Ranveer Singh, raised concerns about misinformation.

What is Deepfake Technology?

- It is a type of Artificial Intelligence used to create convincing images, audio and video hoaxes. Deepfakes often transform existing source content where one person is swapped for another.

- Such content is typically created using Generative Adversarial Networks (GANs), in which two Artificial Neural Networks, a generator and a discriminator, are trained against each other.

Legal Safeguards in India:

Significance of Deepfake Technology:

- Promotes Right to Expression: Deepfakes amplify voices of marginalised individuals, enabling them to share important messages. Recently, a video was created to deliver the final message of a journalist killed by the Saudi government, calling for justice.

- Can contribute to the Education System: Online educators use deepfakes to bring historical figures to life for engaging lessons. For example, a video of Abraham Lincoln delivering his Gettysburg Address.

- Provides Autonomy: Deepfakes empower individuals to control their digital identity and explore new forms of self-expression. For instance, the Reface App.

- Provides a realistic experience: Artists leverage deepfakes for creative expression and collaboration, as seen in Salvador Dali’s interactive museum promotion. Deepfake tech enables realistic lip-syncing for actors speaking different languages, enhancing global accessibility and immersion in films.

- Renovating old memories: Deepfakes aid in restoring old photos, enhancing low-quality footage, and creating realistic training materials for public safety.

What are the limitations of Deepfake Technology?

- Spreading False Information: Deepfakes can deliberately spread misinformation and influence public opinion or elections. For example, fabricated videos of politicians or celebrities can manipulate viewers and create confusion about important issues.

- Frauds: Deepfake technology enables impersonation for financial frauds, tricking individuals into revealing sensitive information. They can also fuel harassment, especially targeting women, and lead to psychological distress.

- Accuracy: While no AI detector guarantees 100% accuracy, tools like Originality.ai boast a 99% true positive rate. Detection models report probability scores, allowing for nuanced assessments despite inherent uncertainties.
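
The point about probability scores can be illustrated with a minimal Python sketch. The sample scores, labels, and thresholds below are hypothetical and are not drawn from Originality.ai or any other real detector; the sketch only shows how the choice of threshold trades the true positive rate against false alarms.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    score: float   # detector's estimated probability that the item is AI-generated
    is_fake: bool  # ground-truth label (hypothetical, for illustration only)

def true_positive_rate(items, threshold):
    """Share of genuinely fake items whose score meets the threshold."""
    fakes = [d for d in items if d.is_fake]
    if not fakes:
        return 0.0
    return sum(1 for d in fakes if d.score >= threshold) / len(fakes)

def false_positive_rate(items, threshold):
    """Share of genuine items wrongly flagged at the threshold."""
    reals = [d for d in items if not d.is_fake]
    if not reals:
        return 0.0
    return sum(1 for d in reals if d.score >= threshold) / len(reals)

# Hypothetical sample: three fake items and three genuine items.
SAMPLE = [
    Detection(0.97, True),
    Detection(0.81, True),
    Detection(0.40, True),
    Detection(0.65, False),
    Detection(0.10, False),
    Detection(0.05, False),
]

for threshold in (0.3, 0.5, 0.9):
    tpr = true_positive_rate(SAMPLE, threshold)
    fpr = false_positive_rate(SAMPLE, threshold)
    print(f"threshold={threshold}: TPR={tpr:.2f}, FPR={fpr:.2f}")
```

In this toy sample, lowering the threshold catches every fake but also flags a genuine item, which is why a high true positive rate alone does not settle how reliable a detector is.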

Future Scope:

- Adversarial AI: Keeping pace with evolving generative AI models poses a significant challenge for content detectors.

- Accessibility and Cost: With increased adoption and advancements, the accessibility and affordability of detection tools are expected to improve.

PYQ: With the present state of development, Artificial Intelligence can effectively do which of the following? (2020)

1. Bring down electricity consumption in industrial units

2. Create meaningful short stories and songs

3. Disease diagnosis

4. Text-to-Speech Conversion

5. Wireless transmission of electrical energy

Select the correct answer using the code given below:

(a) 1, 2, 3 and 5 only

(b) 1, 3 and 4 only

(c) 2, 4 and 5 only

(d) 1, 2, 3, 4 and 5

The democratic political process is broken

From UPSC perspective, the following things are important :

Prelims level: NA

Mains level: Role of Media in civil society

Why in the news?

Having lost credibility, many institutional news media struggle to establish a factual foundation or maintain control over diverse social narratives, affecting society, media principles, and the political milieu in India.

The Present Scenario of Discourse in News Media:

- Institutional Crises: Having lost credibility, institutional news media can no longer establish a factual baseline or control the narrative. Without credibility, news media struggle to maintain authority and trust, which hinders their role in shaping public discourse.

- Impact on Public Discourse: The rise of social media has decentralized content creation and dissemination. Virality, rather than substance, becomes the primary measure of content value. Prioritization of engagement over quality and veracity distorts public discourse.

- Hyper-partisanship in Media: Loss of credibility in mainstream media contributes to hyper-partisanship. News and content are utilized as tools to promote factional interests rather than fostering dialogue and deliberation. Lack of interest in genuine discourse further exacerbates divisions within society.

- Fragmentation of Attention: The proliferation of media channels leads to the fragmentation of collective attention. A constant stream of transient content makes issues appear less significant. Gaining visibility and capturing attention becomes paramount, overshadowing the importance of substantive dialogue.

- Individual Battles and Tribal Affiliation: Public discourse becomes a battleground for individual interests seeking attention and reinforcing tribal affiliations. Lack of genuine dialogue hampers the evolution of consensus, further polarizing society.

Present Scenario of Discourse in Civil Society:

- Increase in Dependency: Liberal civil society increasingly directs its efforts towards engaging with the state and its institutions. Dependency on the state for functioning compromises civil society’s autonomy and independence.

- Legitimacy Issues: Civil society’s legitimacy is now derived more from normative purity than representativeness. This shift undermines civil society’s ability to truly represent diverse viewpoints and reconcile conflicting interests.

- Undermining Societal issues: Civil society becomes more inclined towards single-issue campaigns rather than engaging in broader negotiation and consensus-building. This narrow focus limits its effectiveness in addressing complex societal issues.

- Bypassing Political Processes: Civil society groups tend to bypass political processes and opt for institutional interventions, such as judicial or bureaucratic avenues, to advance their agendas. This strategy may sideline democratic processes and undermine the role of elected representatives in decision-making.

The Present Scenario of Discourse in Political Parties:

- Internal Focus of Political Parties: Political parties often prioritize internal issues over broader deliberations and policy formulation. This internal focus detracts from the party’s ability to engage in constructive dialogue and address pressing societal issues.

- Unable to play a role: Elected representatives are expected to translate constituency issues into a policy agenda. However, within the party setup, they often lack the power and inclination to do so effectively.

- Uncertain Electoral Payoff: Elected representatives may prioritize direct interventions for constituent services over influencing the policy agenda due to uncertain electoral benefits.

- Complex Electoral Dynamics: Elections involve a mix of constituency, state, and national issues, making it challenging for representatives to effectively represent their constituents’ interests. Candidates often rely heavily on party symbols for electoral success, diminishing the significance of individual policy agendas.

- Power Dynamics within Parties: Decision-makers for party tickets hold significant power within political parties, influencing candidate selection and party direction. Limited institutional positions of power lead to internal power struggles and sycophancy among aspirants.

Way Forward:

- Rebuilding Credibility: Implement measures to enhance transparency and accountability within news organizations. Encourage fact-checking and adherence to journalistic standards. Promote diversity of perspectives in news reporting to rebuild trust with diverse audiences.

- Regulation for Social Media Platforms: Implement regulations to combat misinformation and promote responsible content sharing. Foster partnerships between social media companies and fact-checking organizations to verify information.

- Promote Digital Literacy: Invest in education and public awareness campaigns to enhance media literacy among citizens. Equip individuals with critical thinking skills to discern credible sources from misinformation. Foster a culture of skepticism and verification when consuming news and information online.

- Encouraging Civil Society Engagement: Provide support for civil society initiatives that promote inclusivity and dialogue among diverse stakeholders. Enhance funding and resources for civil society organizations to reduce dependency on the state and encourage autonomy.

- Facilitate Political Dialogue and Reform: Encourage political parties to prioritize policy formulation and public deliberation over internal politics. Reform electoral systems to reduce the influence of party symbols and empower individual candidates with policy agendas.

Conclusion: The broken democratic process is exacerbated by media credibility loss, civil society’s state dependency, and internal party issues. Rebuilding media trust, regulating social media, promoting dialogue, and empowering civil society are crucial for restoration.

Mains PYQ-

Q- How do pressure groups influence Indian political process? Do you agree with this view that informal pressure groups have emerged as powerful than formal pressure groups in recent years? (UPSC IAS/2017)

Q- Can Civil Society and Non-Governmental Organisations present an alternative model of public service delivery to benefit the common citizen? Discuss the challenges of this alternative model. (UPSC IAS/2021)

Centre notifies Fact-Check Unit to screen online content

From UPSC perspective, the following things are important :

Prelims level: Legal liability protection, Fact check unit

Mains level: IT Rules, 2021

Why in the news?

The Ministry of Electronics and Information Technology has designated the Press Information Bureau’s Fact Check Unit to point out misinformation about Central government departments on social media platforms ahead of the election.

Context-

- According to the IT Rules of 2021, social media platforms might lose their legal protection from being held responsible for content posted by users if they decide to keep the misinformation flagged by the Fact Check Unit.

Background of this news-

- Due to the controversy surrounding the concept, the Union government had delayed officially notifying the Fact Check Unit as there was ongoing litigation at the Bombay High Court challenging the provision.

- However, this month, the court decided not to prolong a temporary halt that prevented the government from implementing the rules.

Key points as per IT Rules, 2021-

- Mandates: In essence, the IT Rules (2021) demand that social media platforms exercise heightened diligence concerning the content on their platforms. Intermediaries are legally obliged to make reasonable efforts to prevent users from uploading prohibited content.

- Appoint a Grievance Officer: Social media platforms are mandated to set up a grievance redressal mechanism and promptly remove unlawful and inappropriate content within specified timeframes.

- Ensuring Online Safety and Dignity of Users: Intermediaries are obligated to remove or disable access within 24 hours upon receiving complaints about content that exposes individuals’ private areas, depicts them in full or partial nudity, shows them engaged in sexual acts, or involves impersonation, including morphed images.

- Informing users about privacy policies is crucial: Social media platforms’ privacy policies should include measures to educate users about refraining from sharing copyrighted material and any content that could be considered defamatory, racially or ethnically offensive, promoting pedophilia, or threatening the unity, integrity, defense, security, or sovereignty of India or its friendly relations with foreign states, or violating any existing laws.

Fake news on social media can have several negative impacts on governments-

- Undermining Trust- Fake news can erode public trust in government institutions and officials. When false information spreads widely, it can lead to scepticism and doubt about the government’s credibility.

- Destabilizing Democracy- Misinformation can distort public perceptions of government policies and actions, potentially leading to unrest, protests, or even violence. This can destabilize democratic processes and undermine the functioning of government.

- Manipulating Public Opinion- Fake news can be strategically used to manipulate public opinion in favour of or against a particular government or political party. By spreading false narratives, individuals or groups can influence elections and policymaking processes.

- Impeding Policy Implementation- False information circulating on social media can create confusion and resistance to government policies and initiatives. This can impede the effective implementation of programs and reforms.

- Wasting Resources- Governments may be forced to allocate resources to address the fallout from fake news, such as conducting investigations, issuing clarifications, or combating disinformation campaigns. This diverts resources away from other important priorities.

- Fueling Division- Fake news can exacerbate social and political divisions within a country by spreading divisive narratives or inciting hatred and hostility towards certain groups or communities. This can further polarize society and hinder efforts towards unity and cohesion.

Measures to Tackle Fake News on Social Media:

- Mandatory Fact-Checking: Implement a requirement for social media platforms to fact-check content before dissemination.

- Enhanced User Education: Promote media literacy and critical thinking skills to help users discern reliable information from fake news.

- Strengthened Regulation: Enforce stricter regulations on social media platforms to curb the spread of misinformation and hold them accountable for content moderation.

- Collaborative Verification: Foster partnerships between governments, fact-checking organizations, and social media platforms to verify the accuracy of information.

- Transparent Algorithms: Ensure transparency in algorithms used by social media platforms to prioritize content, reducing the spread of false information.

- Swift Removal of Violative Content: Establish mechanisms for prompt removal of fake news and penalize users or entities responsible for spreading it.

- Public Awareness Campaigns: Launch campaigns to raise awareness about the detrimental effects of fake news and promote responsible sharing practices.

Conclusion: To address misinformation, governments should enforce IT Rules (2021), empower fact-checking units, and promote media literacy. Collaboration between authorities, platforms, and citizens is vital for combating fake news and upholding democratic values.

Centre bans 18 OTT Platforms for Inappropriate Content

From UPSC perspective, the following things are important :

Prelims level: Laws governing OTT Ban

Mains level: Read the attached story

In the news

- The Information & Broadcasting Ministry has blocked 18 OTT platforms on the charge of publishing obscene and vulgar content.

How were these platforms banned?

- The content hosted on these OTT platforms was found to be in prima facie violation of:

- Section 67 and 67A of the Information Technology Act, 2000;

- Section 292 of the Indian Penal Code; and

- Section 4 of the Indecent Representation of Women (Prohibition) Act, 1986.

- These platforms violated their responsibility not to propagate obscenity, vulgarity and abuse under the guise of ‘creative expression’.

How are OTT Platforms regulated in India?

- Regulatory Framework: The Information Technology (Guidelines for Intermediaries and Digital Media Ethics Code) Rules, 2021 introduce a Code of Ethics applicable to digital media entities and OTT platforms.

- Key Provisions: These guidelines encompass content categorization, parental controls, adherence to journalistic norms, and the establishment of a grievance redressal mechanism to address concerns.

[A] Content Regulations

- Age-Based Classification: OTT platforms like Netflix and Amazon Prime are mandated to classify their content into five age-based categories: U (universal), 7+, 13+, 16+, and A (adult).

- Parental Locks: Effective parental locks must be implemented for content classified as 13+ or higher, ensuring that parents can control access to age-inappropriate material.

- Age Verification: Robust age verification systems are required for accessing adult content, enhancing parental oversight and safeguarding minors from exposure to inappropriate material.
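
The classification and access-control rules above can be summarised as a small lookup, sketched here in Python. The function names are hypothetical, and the sketch assumes that parental locks apply to 13+ and every more restrictive category, and that age verification applies only to A-rated content.

```python
# Age-based categories in ascending order of restriction, as listed above.
RATINGS = ["U", "7+", "13+", "16+", "A"]

def requires_parental_lock(rating: str) -> bool:
    """Assumed rule: locks apply to 13+ and every more restrictive category.

    Raises ValueError for a rating that is not one of the five categories.
    """
    return RATINGS.index(rating) >= RATINGS.index("13+")

def requires_age_verification(rating: str) -> bool:
    """Assumed rule: only adult (A) content needs age verification."""
    if rating not in RATINGS:
        raise ValueError(f"unknown rating: {rating}")
    return rating == "A"

# Print the controls that would apply to each category under these assumptions.
for rating in RATINGS:
    print(rating, requires_parental_lock(rating), requires_age_verification(rating))
```

Keeping the categories in an ordered list means a rule such as "13+ or higher" can be expressed as a position comparison rather than a hand-maintained set.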

[B] Grievance Redressal Mechanism

- Three-Tier System: A comprehensive grievance redressal mechanism consisting of three tiers has been established:

- Level-I: Publishers are encouraged to engage in self-regulation to address grievances and concerns internally.

- Level-II: A self-regulating body, headed by a retired judge from the Supreme Court or High Court or an eminent independent figure, will oversee complaints and ensure impartial resolution.

- Level-III: The Ministry of Information and Broadcasting will formulate an oversight mechanism and establish an inter-departmental committee tasked with addressing grievances. This body possesses the authority to censor and block content when necessary.

[C] Selective Banning of OTT Communication Services

- Parliamentary Notice: Concerns about the influence and impact of OTT communication services prompted a notice from a Parliamentary Standing Committee to the Department of Telecom (DoT).

- Scope of Discussion: This discussion focuses exclusively on OTT communication services such as WhatsApp, Signal, Meta (formerly Facebook), Google Meet, and Zoom, excluding content-based OTTs like Netflix or Amazon Prime.

- Regulatory Authority: Content regulation within OTT communication services falls under the jurisdiction of the Ministry of Information and Broadcasting (MIB), emphasizing the government’s commitment to ensuring responsible communication practices.

As we enter election year, let us not be defined by our politics — but our kindness

From UPSC perspective, the following things are important :

Prelims level: K-shaped recovery

Mains level: importance of looking beyond personal interests and extending kindness to others.

Broadcast regulation 3.0, commissions and omissions

From UPSC perspective, the following things are important :

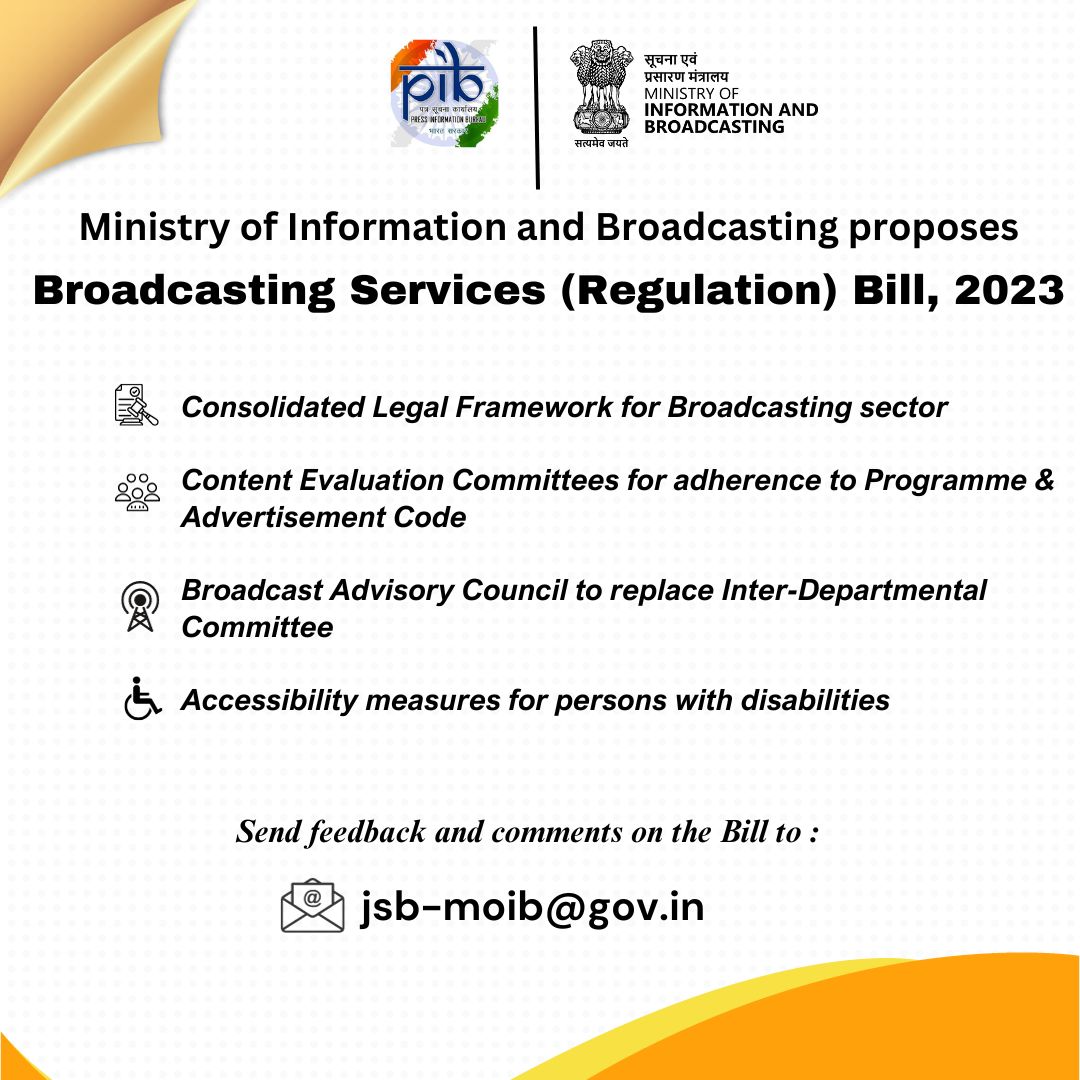

Prelims level: Broadcast Advisory Council

Mains level: press freedom and diversity

Central idea

India’s Broadcasting Services Bill aims at regulating broadcasting comprehensively, introducing positive steps like audience data transparency and competition in terrestrial broadcasting. However, concerns arise over privacy, jurisdictional conflicts with OTT regulation, and lack of measures on ownership and an independent regulator.

Key Highlights:

- The Broadcasting Services (Regulation) Bill aims to regulate broadcasting comprehensively, marking the third attempt since 1997.

- Positive propositions include obligations for record-keeping, audience measurement transparency, and allowing private actors in terrestrial broadcasting.

Key Concerns:

- Lack of privacy safeguards for subscriber and audience data in data collection practices.

- Inclusion of Over-the-Top (OTT) content suppliers in the definition of broadcasting creates jurisdictional conflicts and poses threats to smaller news outlets.

Positive Provisions Requiring Refinement:

- Obligation for maintaining records of subscriber data.

- Stipulation of a methodology for audience measurement.

- Provision to permit private actors in terrestrial broadcasting.

Apprehensions:

- Expanded definition of broadcasting may limit conditions for journalists and news outlets not part of large television networks.

- The mandate for a ‘Content Evaluation Committee’ to self-certify news programming raises feasibility and desirability concerns.

Crucial Silences in the Bill:

- Lack of measures to assess how cross-media and vertical ownership affect diversity in the news marketplace.

- Absence of provisions for creating an independent broadcast regulator.

Government Empowerment and Intrusive Mechanisms:

- The Bill grants the government leeway to inspect broadcasters without prior intimation, impound equipment, and curtail broadcasting in “public interest.”

- Violations of the Programme Code and Advertisement Code could result in deleting or modifying content.

Concerns Regarding Broadcast Advisory Council:

- Doubts about the Council’s capacity to address grievances raised by over 800 million TV viewers.

- Lack of autonomy for the Council, as the Central government has the ultimate decision-making authority.

Key Terms and Phrases:

- Over-the-Top (OTT) content suppliers

- National Broadcasting Policy

- Content Evaluation Committee

- Vertical integration

- Broadcast Advisory Council.

Key Statements:

- Privacy concerns arise due to the Bill’s lack of guardrails for subscriber and audience data collection practices.

- The absence of measures to assess cross-media and vertical ownership impacts the diversity of news suppliers.

- The Bill’s silence on creating an independent broadcast regulator is a significant omission.

Key Examples and References:

- The Bill is part of a series of attempts to regulate broadcasting, following initiatives in 1997 and 2007.

- TRAI’s ‘National Broadcasting Policy’ proposes including OTT content suppliers in the definition of broadcasting services.

Key Facts and Data:

- Lack of specifics on cross-media and vertical ownership in the Bill impedes diversity in the news marketplace.

- No provisions for an independent broadcast regulator; the Bill proposes only a ‘Broadcast Advisory Council.’

Critical Analysis:

- The potential positive provisions of the Bill require refinement, particularly concerning privacy protection and oversight bodies for news outlets.

- Intrusive mechanisms grant significant power to the government, posing concerns about press freedom and external pressure on news suppliers.

Way Forward:

- The Bill must address jurisdictional conflicts, incorporate privacy safeguards, and reconsider intrusive provisions for effective and balanced regulation.

- Protection of press freedom and diversity should be prioritized through fine-tuning potentially positive provisions and addressing omissions.

The challenge of maritime security in the Global South

From UPSC perspective, the following things are important :

Prelims level: India's Maritime Vision 2030

Mains level: Blue Economy: Sustainable use of ocean resources for economic development

Central idea

The article underscores the evolving challenges in the maritime domain, emphasizing the shift from traditional military approaches to a developmental model for maritime security. It highlights the need for collaboration among developing nations to address unconventional threats, such as illegal fishing and climate change, while acknowledging the reluctance to prioritize collective action over political and strategic autonomy.

Key Highlights:

- Evolution of Maritime Challenges: New dimensions in hard security challenges, including asymmetrical tactics and grey-zone warfare. Use of land attack missiles and combat drones reshaping the security landscape.

- Shift in Demand for Maritime Security: Growing demand from states facing unconventional threats such as illegal fishing, natural disasters, and climate change. Need for a broader approach beyond military means to address diverse maritime challenges.

- India’s Developmental Approach: Maritime Vision 2030 focuses on economic growth and livelihood generation through port, shipping, and inland waterway development. Indo-Pacific Oceans Initiative with seven pillars, including maritime ecology, marine resources, and disaster risk reduction.

New Threats in Maritime Domain:

- Recent developments include Ukraine’s asymmetrical tactics and China’s maritime militias, indicating a shift to improvised strategies.

- Emerging threats involve grey-zone warfare, land attack missiles, and combat drones.

Demand for Maritime Security:

- Majority of recent demand stems from unconventional threats like illegal fishing, natural disasters, and climate change.

- Addressing these challenges requires commitment of capital, resources, and specialized personnel.

Global South’s Perspective:

- Developing nations perceive Indo-Pacific competition among powerful nations as detrimental to their interests.

- Challenges involve interconnected objectives in national, environmental, economic, and human security.

Challenges in Global South:

- Rising sea levels, marine pollution, climate change disproportionately impact less developed states, leading to vulnerability.

- Unequal law-enforcement capabilities and lack of security coordination hinder joint efforts against maritime threats.

Creative Models for Maritime Security:

- Maritime security transcends military actions, focusing on generating prosperity and meeting societal aspirations.

- India’s Maritime Vision 2030 emphasizes port, shipping, and inland waterway development for economic growth.

- Dhaka’s Indo-Pacific document and Africa’s Blue Economy concept align with a developmental approach.

Fight Against Illegal Fishing:

- Significant challenge in Asia and Africa marked by a surge in illegal, unreported, and unregulated fishing.

- Faulty policies encouraging destructive methods like bottom trawling and seine fishing contribute to the problem.

India’s Indo-Pacific Oceans Initiative:

- Encompasses seven pillars, including maritime ecology, marine resources, capacity building, and disaster risk reduction.

- Advocates collective solutions for shared problems, garnering support from major Indo-Pacific states.

Challenges in Achieving Consensus:

- Implementation of collaborative strategy faces hurdles in improving interoperability, intelligence sharing, and establishing a regional rules-based order.

- Balancing sovereignty and strategic independence remains a priority for many nations, hindering consensus.

Key Challenges:

- Complexity of Unconventional Threats: Conventional military approaches insufficient; requires capital, resources, and specialist personnel. Challenges include illegal fishing, marine pollution, human trafficking, and climate change.

- Global South’s Coordination Challenges: Unequal law-enforcement capabilities and lack of security coordination among littoral states. Reluctance to prioritize collective action due to varying security priorities and autonomy concerns.

- Vulnerability of Less Developed States: Disproportionate impact of rising sea levels, marine pollution, and climate change on less developed states. Vulnerability stemming from inadequate resources to combat environmental and security challenges.

- Lack of Consensus and Reluctance: Reluctance among littoral states to pursue concrete solutions and collaborate. Paradox of non-traditional maritime security, where collective issues clash with political and strategic autonomy.

Key Terms and Phrases:

- Grey-Zone Warfare: Tactics that fall between peace and war, creating ambiguity in conflict situations.

- Asymmetrical Tactics: Strategies that exploit an opponent’s weaknesses rather than confronting strengths directly.

- Maritime Vision 2030: India’s 10-year blueprint for economic growth in the maritime sector.

- Blue Economy: Sustainable use of ocean resources for economic development.

- Indo-Pacific Oceans Initiative: India’s initiative with pillars like maritime ecology, marine resources, and disaster risk reduction.

- IUU Fishing: Illegal, unreported, and unregulated fishing.

- Bottom Trawling and Seine Fishing: Destructive fishing methods contributing to illegal fishing.

Key Examples and References:

- Ukraine’s Asymmetrical Tactics: Utilization of unconventional strategies in the Black Sea.

- China’s Maritime Militias: Deployment in the South China Sea as an example of evolving threats.

- India’s Maritime Vision 2030: Illustrates a developmental approach to maritime security.

- Illegal Fishing in Asia and Africa: Rising challenge with negative environmental and economic impacts.

Key Facts and Data:

- Maritime Vision 2030: India’s 10-year plan for the maritime sector.

- Indo-Pacific Oceans Initiative: Seven-pillar initiative for collective solutions in the maritime domain.

Critical Analysis:

- Shift to Developmental Model: Emphasis on generating prosperity and meeting human aspirations in addition to traditional security measures.

- Comprehensive Maritime Challenges: Recognition of diverse challenges beyond military threats, including environmental and economic goals.

- Littoral State Reluctance: Paradox in the Global South, where collective issues clash with autonomy, hindering collaborative solutions.

Way Forward:

- Collaborative Strategies: Improved interoperability, intelligence sharing, and agreement on a regional rules-based order.

- Prioritizing Collective Action: Developing nations must prioritize collective action over sovereignty for effective maritime solutions.

- Sustainable Development Goals: Prioritize sustainable development goals in littoral states, addressing challenges such as illegal fishing and climate change.


Rashmika Mandanna’s deepfake: Regulate AI, don’t ban it

From UPSC perspective, the following things are important :

Prelims level: deepfake

Mains level: Discussions on Deepfakes

Central idea

The article highlights challenges in deepfake regulation using the example of the Rashmika Mandanna video. It calls for a balanced regulatory approach, citing existing frameworks like the IT Act, and recommends clear guidelines, public awareness, and potential amendments in upcoming legislation such as the Digital India Act to effectively tackle deepfake complexities.

What is deepfake?

- Definition: Deepfake involves using advanced artificial intelligence (AI), particularly deep learning algorithms, to create manipulated content like videos or audio recordings.

- Manipulation: It can replace or superimpose one person’s likeness onto another, making it appear as though the targeted individual is involved in activities they never participated in.

- Concerns: Deepfakes raise concerns about misinformation, fake news, and identity theft, as the technology can create convincing but entirely fabricated scenarios.

- Legitimate Use: Despite concerns, deepfake technology has legitimate uses, such as special effects in the film industry or anonymizing individuals, like journalists reporting from sensitive or dangerous situations.

- Sophistication Challenge: The increasing sophistication of AI algorithms makes it challenging to distinguish between genuine and manipulated content.

Key Highlights:

- Deepfake Impact: The article discusses the impact of deepfake technology, citing the example of a viral video of actor Rashmika Mandanna, which turned out to be a deepfake.

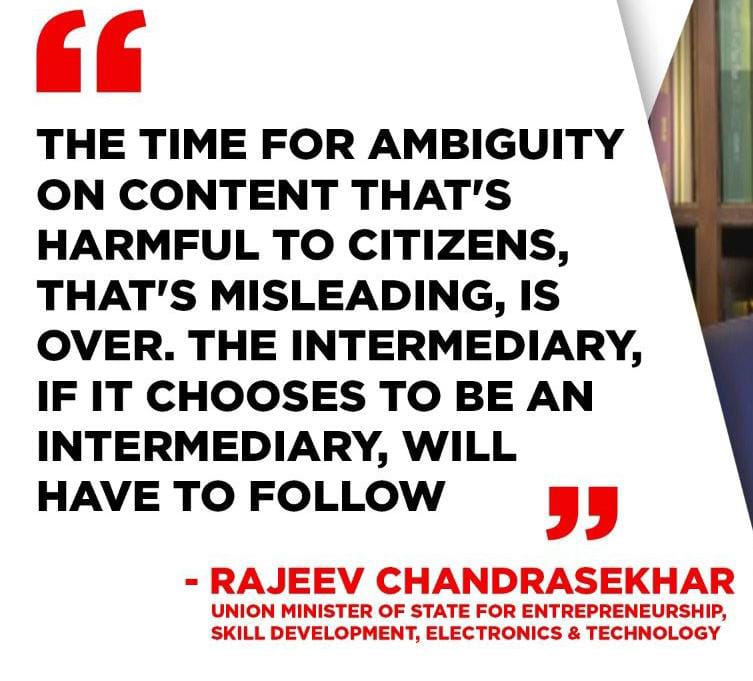

- Regulatory Responses: It explores different approaches to regulate deepfakes, highlighting the need for a balanced response that considers both AI and platform regulation. Minister Rajeev Chandrasekhar’s mention of regulations under the IT Act is discussed.

- Legitimate Uses: The article recognizes that while deepfakes can be misused for scams and fake videos, there are also legitimate uses, such as protecting journalists in oppressive regimes.

Challenges:

- Regulatory Dilemma: The article points out the challenge of finding a balanced regulatory approach, acknowledging the difficulty in distinguishing between lawful and unlawful uses of deepfake technology.

- Detection Difficulty: Advancements in AI have made it increasingly difficult to detect deepfake videos, posing a threat to individuals depicted in such content and undermining trust in video evidence.

- Legal Ambiguities: The article highlights legal ambiguities around deepfakes, as creating false content is not inherently illegal, and distinguishing between obscene, defamatory, or satirical content can be challenging.

Key Facts:

- The article mentions the viral deepfake video of Rashmika Mandanna and its impact on the debate surrounding deepfake regulations.

- It highlights the challenges in detecting the new generation of almost indistinguishable deepfakes.

Government Actions:

- Legal Frameworks in Action: The Indian government relies on the Information Technology (IT) Act to regulate online content. For instance, platforms are obligated to remove unlawful content within specific timeframes, demonstrating an initial approach to content moderation.

- Policy Discussions on Deepfakes: Policymakers are actively engaging in discussions regarding amendments to the IT Act to explicitly address deepfake-related challenges. This includes considerations for adapting existing legal frameworks to the evolving landscape of AI-generated content.

What more needs to be done:

- Legislative Clarity for Platforms: Governments should provide explicit guidance within legislative frameworks, instructing online platforms on the prompt identification and removal of deepfake content. For instance, specifying mechanisms to ensure compliance with content moderation obligations within stringent timelines.

- AI Regulation Example: Develop targeted regulations for AI technologies involved in deepfake creation. China’s approach, requiring providers to obtain consent from individuals featured in deepfakes, serves as a specific example. Such regulations could be incorporated into existing legal frameworks.

- Public Awareness Campaigns: Drawing inspiration from successful public awareness initiatives in other domains, governments can implement campaigns similar to those addressing cybersecurity. These campaigns would educate citizens about the existence and potential threats of deepfakes, empowering them to identify and report such content.

- Global Collaboration Instances: Emphasizing the need for global collaboration, governments can cite successful instances of information-sharing agreements. For example, collaboration frameworks established between countries to combat cyber threats could serve as a model for addressing cross-border challenges posed by deepfakes.

- Technological Innovation Support: Encourage research and development by providing grants or incentives for technological solutions. Specific examples include initiatives that have successfully advanced cybersecurity technologies, showcasing the government’s commitment to staying ahead of evolving threats like deepfake.

Way Forward:

- Multi-pronged Regulatory Response: The article suggests avoiding reactionary calls for specialized regulation and instead opting for a comprehensive regulatory approach that addresses both AI and platform regulation.

- Digital India Act: The upcoming Digital India Act is seen as an opportunity to address deepfake-related issues by regulating AI, emerging technologies, and online platforms.


Meta lawsuits: Big Tech will always be bad for mental health

From UPSC perspective, the following things are important :

Prelims level: Dopamine

Mains level: The problem with social media and its business model

Central idea

The article delves into the social media crisis, pointing fingers at Meta for exacerbating youth mental health issues through Instagram’s addictive features. Legal actions highlight the platforms’ intentional exploitation of young users’ vulnerabilities. To address this, a suggested solution is contemplating a shift from the current profit-driven business model to a subscription-based one.

Key Highlights:

- Social Media Crisis: Social media platforms, especially Meta (formerly Facebook), are facing a crisis due to concerns about their impact on mental health, particularly among youth.

- Legal Action Against Meta: Forty-two US attorneys general have filed lawsuits against Meta, alleging that Instagram, a Meta-owned platform, actively contributes to a youth mental health crisis through addictive features.

- Allegations Against Meta: The lawsuit claims that Meta knowingly designs algorithms to exploit young users’ dopamine responses, creating an addictive cycle of engagement for profit.

- Dopamine and Addiction: Dopamine, associated with happiness, is triggered by likes on platforms like Facebook, leading to heightened activity in children’s brains, making them more susceptible to addictive behaviors.

Prelims focus – Dopamine


Key examples for mains value addition

- The Social Dilemma (2020): A Netflix docudrama that showed how social media platforms, led by Meta, manipulate attention and influence behavior, with a particular impact on the mental health of young people.

- Frances Haugen’s Revelations: A whistleblower exposed internal Meta documents showing that Instagram worsened body image issues for teen girls, making social media’s impact on mental health a serious concern.

- US Surgeon General’s Advisory: The government’s health expert issued a warning about the negative effects of social media on young minds, emphasizing its importance in President Biden’s State of the Union address.

Challenges:

- Addictive Business Model: The core issue with social media is its business model, focusing on user engagement and data monetization, potentially at the expense of user well-being.

- Transformation from Networks to Media: Social networks, initially built for human connection, have transformed into media properties where users are treated as data for advertisers, impacting their habits and behaviors.

- Global Regulatory Scrutiny: Meta faces regulatory challenges beyond the US, with the UK, the EU, and India considering legislative measures. India, which has the largest Instagram user base, emphasizes accountability for content hosted on platforms.

Analysis:

- Business Model Critique: The article argues that the problem with social media lies in its business model, which prioritizes user engagement for data collection and monetization.

- Regulatory Consequences: If the lawsuit succeeds, Meta could face significant penalties, potentially adding up to billions of dollars, and signaling a major setback for the company.

- Global Impact: Regulatory scrutiny extends beyond the US, indicating a need for platforms to be more accountable and responsible for their content and user interactions on a global scale.

Key Data:

- Potential Penalties: Meta could face penalties of up to $5,000 for each violation if the lawsuit succeeds, posing a significant financial threat considering Instagram’s large user base.

- Regulatory Pressure in India: India, with 229 million Instagram users, emphasizes the end of a free pass for platforms, signaling a global shift towards increased accountability.

Way Forward:

- Shift to Subscription Model: The article suggests that social networks might consider adopting a subscription model, akin to OpenAI’s approach, to prioritize user well-being over advertising revenue.

- Listen to Regulatory Signals: Platforms are urged to heed regulatory signals and work collaboratively to address issues rather than adopting a confrontational stance.

- Long-term Survival: To ensure long-term survival, social media networks may need to reevaluate their business models, aligning them with user well-being rather than prioritizing engagement and data monetization.

In essence, the article highlights the crisis in social media, legal challenges against Meta, the critique of the business model, global regulatory scrutiny, and suggests potential shifts in the industry’s approach for long-term survival.


TRAI can’t regulate OTT platforms: TDSAT

From UPSC perspective, the following things are important :

Prelims level: TRAI

Mains level: OTT Regulations

Central Idea

- The Telecom Disputes Settlement and Appellate Tribunal (TDSAT) has issued an interim order clarifying that Over the Top (OTT) platforms, such as Hotstar, fall outside the jurisdiction of the Telecom Regulatory Authority of India (TRAI).

- Instead, they are governed by the Information Technology Rules, 2021, established by the Ministry of Electronics and Information Technology (MeitY).

Context for TDSAT’s Decision

- The All India Digital Cable Federation (AIDCF) initiated the petition, alleging that Star India’s free streaming of ICC Cricket World Cup matches on mobile devices through Disney+ Hotstar is discriminatory under TRAI regulations.

- This is because viewers can only access matches on Star Sports TV channels by subscribing and making monthly payments.

Diverging Opinions on OTT Regulation

- IT Ministry vs. DoT: The IT Ministry contends that internet-based communication services, including OTT platforms, do not fall under the jurisdiction of the DoT, citing the Allocation of Business Rules.

- DoT’s Draft Telecom Bill: The DoT proposed a draft telecom Bill that classifies OTT platforms as telecommunications services and seeks to regulate them as telecom operators. This move has encountered objections from MeitY.

TRAI’s Attempt at OTT Regulation

- Changing Stance: TRAI, after three years of maintaining that no specific regulatory framework was required for OTT communication services, began consultations on regulating these services.

- Consultation Paper: In June, TRAI released a consultation paper seeking input on regulating OTT services and exploring whether selective banning of OTT services could be considered as an alternative to complete Internet shutdowns.

- Telecom Operators’ Demand: Telecom operators have long advocated for “same service, same rules” and have pushed for regulatory intervention for OTT platforms.

Significance of TDSAT’s Order

- The TDSAT decision is significant because of the ongoing debate over the regulation of OTT services.

- TRAI and the Department of Telecommunications (DoT) have been attempting to regulate OTT platforms, while the Ministry of Electronics and Information Technology opposes these efforts.

Recommendations and Monitoring

- In September 2020, TRAI recommended against regulatory intervention for OTT platforms, suggesting that market forces should govern the sector.

- However, it also emphasized the need for monitoring and intervention at an “appropriate time.”

Conclusion

- The recent TDSAT ruling on OTT platform jurisdiction adds complexity to the ongoing debate over the regulation of these services in India.

- While TRAI and the DoT seek regulatory measures, the IT Ministry contends that such services fall outside the purview of telecommunications regulation.

- The evolving landscape highlights the need for a nuanced approach to balance the interests of various stakeholders, including telecom operators, government authorities, and the broader public.


Fediverse: Understanding Decentralized Social Networking

From UPSC perspective, the following things are important :

Prelims level: Fediverse

Mains level: NA

Central Idea

- Meta, the parent company of Facebook, Instagram, and WhatsApp, has launched Threads, a Twitter rival, which is set to become a part of the fediverse.

- While Meta’s move has garnered attention, the company is yet to reveal its plans for utilizing the fediverse to build a decentralized social network.

What is the Fediverse?

- Network of Servers: The fediverse is a group of federated social networking services that operate on decentralized networks using open-source standards.

- Third-Party Servers: It comprises a network of servers run by third parties, not controlled by any single entity. Social media platforms can utilize these servers to facilitate communication between their users.

- Cross-Platform Communication: Users on social media platforms within the fediverse can seamlessly communicate with users of other platforms within the network, eliminating the need for separate accounts for each platform.

- Platforms in the Fediverse: Meta’s Threads is set to join the fediverse alongside existing platforms such as Pixelfed (photo-sharing), PeerTube (decentralized video-sharing), Lemmy, Diaspora, Movim, Prismo, and WriteFreely.

Benefits of Using the Fediverse

- Decentralized Nature: Social media platforms adopt the fediverse to leverage its decentralized nature, giving users more control over the content they view and interact with.

- Cross-Platform Communications: The fediverse enables easier communication between users of different social media platforms within the network.

- Data Portability: Users can freely transport their data to other platforms within the fediverse, ensuring greater flexibility and control over their online data.

Challenges Hindering Wider Adoption

- Scalability: Decentralized servers might face challenges in handling large amounts of traffic, leading to potential scalability issues.

- Content Moderation: The decentralized nature of the fediverse poses difficulties in implementing and enforcing uniform content moderation policies across servers.

- Data Privacy: Enforcing data privacy policies becomes more challenging since data posted on one server might not be deleted due to differing data deletion policies on other servers.

The Fediverse’s Evolution

- Long-standing Idea: The concept of the fediverse has been around for decades, with attempts made by companies like Google to embrace decentralized networks.

- Emergence of Notable Platforms: Platforms like Identi.ca (founded in 2008) and Mastodon and Pleroma (emerged in 2016) have contributed to the development of the fediverse.

- ActivityPub Protocol: In 2018, the World Wide Web Consortium (W3C) published the ActivityPub protocol as a recommended standard; it is now commonly used by applications within the fediverse.
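
To make the idea of cross-platform communication more concrete, the following is a minimal sketch of the kind of message ActivityPub servers exchange: a short post (a "Note") wrapped in a "Create" activity and serialized as JSON. The account URLs and the helper name `build_create_note` are hypothetical and chosen only for illustration; real servers add further fields and authentication that are omitted here.

```python
import json

# Standard ActivityStreams 2.0 context that ActivityPub messages declare.
AS_CONTEXT = "https://www.w3.org/ns/activitystreams"


def build_create_note(actor_url: str, text: str, recipients: list[str]) -> dict:
    """Wrap a short text post (a Note) in a Create activity.

    Hypothetical helper for illustration; not part of any library.
    """
    note = {
        "type": "Note",
        "attributedTo": actor_url,
        "content": text,
        "to": recipients,
    }
    return {
        "@context": AS_CONTEXT,
        "type": "Create",
        "actor": actor_url,
        "to": recipients,
        "object": note,
    }


# Hypothetical accounts hosted on two different, independently run servers.
alice = "https://photos.example/users/alice"
bob = "https://video.example/users/bob"

activity = build_create_note(alice, "Hello from another server!", [bob])
print(json.dumps(activity, indent=2))
```

Because both servers understand the same message format, an account on one platform can address a post to an account on another without holding a second account there.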


Section 69 (A) of IT Act

From UPSC perspective, the following things are important :

Prelims level: Section 69A of IT Act

Mains level: Not Much

Central Idea

- The Indian government has exercised its powers under Section 69(A) of the Information Technology Act, 2000.

- It requested Twitter and other social media platforms to remove a video depicting the naked parade and sexual assault of two Manipur women.

What is Section 69(A) of the IT Act?

- Empowering Content Takedown: Section 69(A) allows the government to issue content-blocking orders to online intermediaries like ISPs, web hosting services, search engines, etc.

- Grounds for Blocking: Content can be blocked if it is considered a threat to India’s national security, sovereignty, public order, or friendly relations with foreign states, or if it incites the commission of cognizable offenses.

- Review Committee: Requests made by the government for blocking content are sent to a review committee, which issues the necessary directions. Such orders are typically kept confidential.

Supreme Court’s Verdict on Section 69(A)

- Striking Down Section 66A: In the case of Shreya Singhal vs. Union of India (2015), the Supreme Court struck down Section 66A of the IT Act, which penalized the sending of offensive messages through communication services.

- Section 69(A) Validated: The Court upheld the constitutionality of Section 69(A) of the IT Act and of the Blocking Rules, 2009, framed under it, noting that the provision is narrowly drawn and includes several safeguards.

- Limited Blocking Authority: The Court emphasized that blocking can only be carried out if the Central Government is satisfied about its necessity, and the reasons for blocking must be recorded in writing for legal challenges.

Other Rulings on Section 69(A)

- Twitter’s Challenge: Twitter approached the Karnataka High Court in July last year, contesting the Ministry of Electronics and Information Technology’s (MeitY) content-blocking orders issued under Section 69(A).

- Court’s Dismissal: In July of this year, the single-judge bench of the Karnataka HC dismissed Twitter’s plea, asserting that the Centre has the authority to block tweets.

- Extending Blocking Powers: Justice Krishna S Dixit ruled that the Centre’s blocking powers extend not only to single tweets but to entire user accounts as well.

Conclusion

- The application of Section 69(A) has been a subject of legal and societal debate, as it aims to balance national security and public order concerns with the protection of free speech and expression.


Why TRAI wants to regulate WhatsApp, similar services

From UPSC perspective, the following things are important :

Prelims level: TRAI

Mains level: Regulating OTT communication services, necessity and challenges

Central Idea

- In a surprising move, the TRAI is reconsidering its previous stance on regulating OTT communication services such as WhatsApp, Zoom, and Google Meet. Almost three years after advising against a specific regulatory framework for these services, TRAI has released a consultation paper, inviting stakeholders to provide suggestions on regulating OTT services.

What is the Telecom Regulatory Authority of India (TRAI)?

- TRAI is an independent regulatory body established by the Government of India to regulate and promote telecommunications and broadcasting services in the country.

- TRAI’s primary mandate is to ensure fair competition, protect consumer interests, and facilitate the growth and development of the telecom industry in India.

- TRAI performs various functions to fulfill its objectives, including formulating regulations, recommending the terms and conditions of licences for telecom service providers (the licences themselves are issued by the Department of Telecommunications), monitoring compliance with regulations, promoting fair competition, and conducting research and analysis in the telecom sector.

- TRAI also acts as an advisory body to the government on matters related to telecommunications and broadcasting.

What is Over-the-top (OTT)?

- OTT refers to the delivery of audio, video, and other media content over the internet directly to users, bypassing traditional distribution channels such as cable or satellite television providers.

- OTT communication services offer users the ability to make voice and video calls, send instant messages, and engage in group chats using internet-connected devices.

- Examples of popular OTT services include video streaming platforms like Netflix, Amazon Prime Video, and Disney+, music streaming services like Spotify and Apple Music, communication apps like WhatsApp and Skype, and social media platforms like Facebook and Instagram.

Growing complexity of regulating Internet services

- Rapid Technological Advancements: The Internet landscape is constantly evolving, with new technologies, platforms, and services emerging regularly, which makes it challenging for regulators to keep up with the latest developments and their potential implications.

- Convergence of Services: Traditionally distinct services such as telecommunications, broadcasting, and information technology are converging in the digital realm. Internet services now encompass a wide range of functionalities, including communication, entertainment, e-commerce, social networking, and more.

- Global Nature of the Internet: The Internet transcends national boundaries, making it difficult to implement uniform regulations across jurisdictions. Different countries have varying approaches to Internet governance, privacy laws, content regulation, and data protection.

- Privacy and Data Protection: The collection, storage, and use of personal data by Internet services have raised concerns about privacy and data protection.

- Content Moderation and Fake News: The rise of social media and user-generated content platforms has brought forth challenges related to content moderation, misinformation, and disinformation. Regulators are grappling with issues of freedom of speech, ensuring responsible content practices, and combatting the spread of fake news and harmful content online.

Why is TRAI exploring selective banning of OTT apps?

- Economic Ramifications: Shutting down telecommunications or the entire Internet can have significant negative consequences for a country’s economy. By exploring selective banning of OTT apps, TRAI aims to mitigate the economic ramifications while still addressing concerns related to specific apps or content.

- Technological Challenges: Traditional methods of blocking websites or apps may face challenges when dealing with dynamic IP addresses and websites hosted on cloud servers. Advanced techniques and encryption protocols like HTTPS make it difficult for service providers to block or filter content at the individual app level. Despite these challenges, TRAI believes that it is still possible to identify and block access to specific websites or apps through network-level filtering or other innovative methods.

- Parliament Committee Recommendation: TRAI’s exploration of selective banning of OTT apps aligns with the recommendation made by the Parliamentary Standing Committee on IT. The committee suggested that targeted blocking of specific websites or apps could be a more effective approach compared to a blanket ban on the entire Internet.

Why is it necessary to regulate OTT communication services?

- Consumer Protection: Regulations can help ensure consumer protection by establishing standards for privacy, data security, and user rights. OTT communication services handle vast amounts of personal data and facilitate sensitive conversations, making it crucial to have safeguards in place to protect user privacy and secure their data from unauthorized access or misuse.

- Quality and Reliability: By establishing minimum service standards, authorities can ensure that users have consistent and reliable access to communication services, minimizing disruptions and service outages.

- National Security: OTT communication services play a significant role in everyday communication, including personal, business, and government interactions. Ensuring national security interests may require regulatory oversight to address issues like lawful interception capabilities, preventing misuse of services for illegal activities, and maintaining the integrity of critical communications infrastructure.

- Level Playing Field: Regulatory measures aim to create a level playing field between traditional telecom operators and OTT service providers. Regulating OTT communication services can address the perceived disparity in obligations and promote fair competition among different service providers.

- Public Interest and Social Responsibility: OTT communication services have become integral to societal functioning, enabling education, healthcare, business communication, and more. Regulations can ensure that these services operate in the public interest and uphold social responsibilities. For example, regulations can address issues like combating misinformation, hate speech, or harmful content on these platforms.

Conclusion

- TRAI’s decision to revisit its stance on regulating OTT communication services reflects the evolving dynamics of the Internet industry. The consultation paper and the draft telecom Bill highlight the need for regulatory parity and financial considerations in this sector. As stakeholders provide suggestions, it remains to be seen how TRAI will strike a balance between regulating OTT services and fostering innovation in the digital landscape.



A case of unchecked power to restrict e-free speech

From UPSC perspective, the following things are important :

Prelims level: Related provisions and important Judgements

Mains level: Changing prospects of freedom of speech and expression

Central idea

- The recent judgment by the Karnataka High Court dismissing Twitter’s challenge to blocking orders issued by the Ministry of Electronics and Information Technology (MeitY) raises serious concerns about the erosion of free speech and unchecked state power. By imposing an exorbitant cost on Twitter and disregarding established procedural safeguards, the judgment sets a worrisome precedent for content takedowns and hampers the exercise of digital rights.

Relevance of the topic

The concerns raised in the Karnataka High Court judgment are in contrast to the principles established in the Shreya Singhal case.

Highly relevant to the principles of natural justice and the expanded scope of online speech and expression.

Concerns raised over the judgement

- Ignorance of Procedural Safeguards: The court’s interpretation undermines the procedural safeguards established under the Information Technology Act, 2000, and the Blocking Rules of 2009. By disregarding the requirement to provide notice to users and convey reasons for blocking, the judgment enables the state to restrict free speech without proper oversight, leading to potential abuse of power.

- Unchecked State Power: The judgment grants the state unchecked power in taking down content without following established procedures. This lack of oversight raises concerns about potential misuse and arbitrary blocking of content, which could lead to the suppression of dissenting voices and curtailment of free speech rights.

- Expansion of Grounds for Restricting Speech: The court’s reliance on combating “fake news” and “misinformation” as grounds for blocking content goes beyond the permissible restrictions on free speech under Article 19(2) of the Constitution. This expansion of grounds for blocking content raises concerns about subjective interpretations and the potential for suppressing diverse viewpoints and dissent.

- Chilling Effect on Free Speech: The acceptance of wholesale blocking of Twitter accounts without specific justification creates a chilling effect on free speech. This can deter individuals from expressing their opinions openly and engaging in meaningful discussions, ultimately inhibiting democratic discourse and stifling freedom of expression.

- Deviation from Judicial Precedent: The judgment deviates from the precedent set by the Supreme Court in the Shreya Singhal case, which upheld the constitutionality of Section 69A while emphasizing the importance of procedural safeguards.

The Shreya Singhal case

- The Shreya Singhal case is a landmark judgment by the Supreme Court of India that has significant implications for freedom of speech and expression online.

- In this case, the Supreme Court struck down Section 66A of the Information Technology Act, 2000, as unconstitutional on grounds of violating the right to freedom of speech and expression guaranteed under Article 19(1)(a) of the Constitution.

- The judgment in the Shreya Singhal case is significant in the context of freedom of speech and expression because it reinforces several principles:

- Overbreadth and Vagueness: The court emphasized that vague and overly broad provisions that can be interpreted subjectively may lead to a chilling effect on free speech. Section 66A, which allowed for the punishment of online speech that caused annoyance, inconvenience, or insult, was considered vague and prone to misuse, leading to the restriction of legitimate expression.

- Requirement of Procedural Safeguards: The Supreme Court highlighted the importance of procedural safeguards to protect freedom of speech. It stated that any restriction on speech must be based on clear and defined grounds and must be accompanied by adequate procedural safeguards, including the provision of notice to the affected party and the opportunity to be heard.

- Need for a Direct Nexus to Public Order: The judgment reiterated that restrictions on speech should be based on specific grounds outlined in Article 19(2) of the Constitution. It emphasized that there must be a direct nexus between the speech and the threat to public order, and mere annoyance or inconvenience should not be a ground for restriction.

Impact of the Karnataka High Court judgment on freedom of speech and expression

- Undermining Freedom of Speech: The judgment undermines freedom of speech and expression by allowing the state to exercise unchecked power in taking down content without following established procedures. This grants the state the ability to curtail speech and expression without proper justification or recourse for affected parties.

- Prior Restraint: The judgment’s acceptance of wholesale blocking of Twitter accounts, without targeting specific tweets, amounts to prior restraint on freedom of speech. This restricts future speech and expression, contrary to the principles established by the Supreme Court.

- Lack of Procedural Safeguards: The judgment disregards procedural safeguards established in previous court rulings, such as the requirement for recording a reasoned order and providing notice to affected parties. This lack of procedural safeguards undermines transparency, accountability, and the protection of freedom of speech and expression.

- Unchecked State Power: Granting the state unfettered power in content takedowns without proper oversight or recourse raises concerns about abuse and arbitrary censorship. It allows the state to remove content without clear justifications, potentially stifling dissenting voices and limiting the diversity of opinions.

- Restricting Online Discourse: By restricting the ability of users and intermediaries to challenge content takedowns, the judgment curtails the online discourse and hampers the democratic values of open discussion and exchange of ideas on digital platforms.

- Disproportionate Impact on Digital Rights: The judgment’s disregard for procedural safeguards and expanded grounds for content takedowns disproportionately affect digital rights. It impedes individuals’ ability to freely express themselves online, limiting their participation in public discourse and impacting the vibrancy of the digital space.

Way forward

- Strengthen Procedural Safeguards: It is essential to reinforce procedural safeguards in the process of blocking content. Clear guidelines should be established, including the provision of notice to affected users and conveying reasons for blocking. This ensures transparency, accountability, and the opportunity for affected parties to challenge the blocking orders.

- Uphold Judicial Precedents: It is crucial to adhere to established judicial precedents, such as the principles outlined in the Shreya Singhal case. Courts should interpret laws relating to freedom of speech and expression in a manner consistent with constitutional values, protecting individual rights and ensuring a robust and inclusive public discourse.

- Review and Amend Legislation: There may be a need to review and amend relevant legislation, such as Section 69A of the Information Technology Act, to address the concerns raised by the judgment. The legislation should clearly define the grounds for blocking content and ensure that restrictions are based on constitutionally permissible grounds, protecting freedom of speech while addressing legitimate concerns.

- Promote Digital Literacy: Enhancing digital literacy among citizens can empower individuals to navigate online platforms responsibly, critically evaluate information, and exercise their freedom of speech effectively. Educational initiatives can focus on teaching digital literacy skills, media literacy, and responsible online behavior.

- Encourage Public Discourse and Open Dialogue: It is important to foster an environment that encourages open discourse and dialogue on matters of public interest. Platforms for discussion and debate should be facilitated, providing individuals with opportunities to express their opinions, share diverse perspectives, and engage in constructive conversations.

- International Collaboration: Collaboration with international stakeholders and organizations can contribute to promoting and protecting freedom of speech and expression in the digital realm. Sharing best practices and lessons learned, and cooperating on global norms and standards, can strengthen the protection of these rights across borders.

Conclusion

- The Karnataka High Court’s judgment undermines procedural safeguards, erodes the principles of natural justice, and grants unchecked power to the state in removing content it deems unfavorable. This ruling, coupled with the recently amended IT Rules on fact-checking, endangers free speech and digital rights. It is crucial to protect and uphold the right to free speech while ensuring that restrictions are justified within the confines of the Constitution.

Deepfakes: A Double-Edged Sword in the Digital Age

Central Idea

- Deepfakes, produced through advanced deep learning techniques, manipulate media to present false information. These creations distort reality, blurring the lines between fact and fiction, and pose significant challenges to society. While deepfakes have emerged as an “upgrade” from traditional photoshopping, their potential for deception and manipulation should not be underestimated.

What is meant by deepfakes?

- Deepfakes refer to synthetic media or manipulated content created using deep learning algorithms, such as generative adversarial networks (GANs).

- Deepfakes involve altering or replacing the appearance or voice of a person in a video, audio clip, or image to make it seem like they are saying or doing something they never actually did. The term “deepfake” is a combination of “deep learning” and “fake.”

- Deepfake technology utilizes AI techniques to analyze and learn from large datasets of real audio and video footage of a person.

The Power of Deepfakes

- Manipulate Media: Deepfakes can convincingly alter images, videos, and audio, allowing for the creation of highly realistic and deceptive content.

- Blur Reality: Deepfakes can distort reality and create false narratives, blurring the lines between fact and fiction.

- Transcend Human Skill: Deepfakes go beyond traditional methods of manipulation like photoshopping, utilizing advanced deep learning algorithms to process large amounts of data and generate realistic falsified media.

- Produce Real-Time Content: Deepfakes can be generated in real-time, enabling the rapid creation and dissemination of manipulated content.

- Reduce Imperfections: Compared to traditional manipulation techniques, deepfakes exhibit fewer imperfections, making them more difficult to detect and debunk.

- Spread Misinformation: Deepfakes have the potential to spread misinformation on a large scale, influencing public opinion, and creating confusion.

- Exploit Facial Recognition: Deepfakes can be used to manipulate facial recognition software, potentially bypassing security measures and compromising privacy.

- Create Illicit Content: Deepfakes have been misused to generate non-consensual pornography (“revenge porn”) by superimposing someone’s face onto explicit material without their consent.

- Influence Elections: Deepfakes can be employed to create videos that depict political figures engaging in inappropriate behavior, potentially swaying public opinion and impacting election outcomes.

- Persist in Digital Space: Once released, deepfakes can continue to circulate online, leaving a lasting impact even after their falsehood is exposed.

Positive applications of deepfakes

- Voice Restoration: Deep learning algorithms have been employed in initiatives like the ALS Association’s “voice cloning initiative.” These efforts aim to restore the voices of individuals affected by conditions such as amyotrophic lateral sclerosis, providing a means for them to communicate and regain their voice.

- Entertainment and Creativity: Deepfakes have found applications in comedy, cinema, music, and gaming, enabling the recreation and reinterpretation of historical figures and events. Through deep learning techniques, experts have recreated the voices and/or visuals of renowned individuals.

- Visual Effects and Film Industry: Deepfakes have been utilized in the film industry to create realistic visual effects, allowing filmmakers to bring fictional characters to life or seamlessly integrate actors into different environments.

- Historical and Cultural Preservation: Deepfakes can aid in preserving and understanding history by recreating historical figures or events. By using deep learning algorithms, experts can breathe life into archival footage or photographs, enabling a deeper understanding of the past and enhancing cultural preservation efforts.

- Augmented Reality and Gaming: Deep learning techniques are employed to create immersive augmented reality experiences and enhance gaming graphics. By generating realistic visuals and interactions, deepfakes contribute to the advancement of these technologies, providing users with captivating and engaging virtual experiences.

- Medical Training and Simulation: Deepfakes can be used in medical training and simulation scenarios to create lifelike virtual patients or simulate medical procedures. This allows healthcare professionals to gain valuable experience and enhance their skills in a controlled and safe environment.

The path to redemption regarding deepfakes

- Regulatory Framework: Implementing comprehensive laws and regulations is necessary to govern the creation, distribution, and use of deepfakes. These regulations should address issues such as consent, privacy rights, intellectual property, and the consequences for malicious actors.

- Punishing Malicious Actors: Establishing legal consequences for those who create and disseminate deepfakes with malicious intent is essential. This deterrence can discourage the misuse of this technology and protect individuals from the harmful effects of false and manipulated media.

- Democratic Inputs: Including democratic input in shaping the future of deepfake technology is crucial. Involving diverse stakeholders, including experts, policymakers, and the public, can help establish guidelines, ethical frameworks, and standards that reflect societal values and interests.

- Digital Literacy and Education: Promoting scientific, digital, and media literacy is essential for individuals to navigate the deepfake landscape effectively. Equipping people with the critical thinking skills necessary to identify and analyze manipulated media helps them become empowered consumers of, and contributors to, a more informed society.